The recent AI boom, fueled by Large Language Models (LLMs) such as those behind ChatGPT, happened because these models became massive – billions of parameters – and were trained on huge computers with multiple GPUs and hundreds of gigabytes of memory.

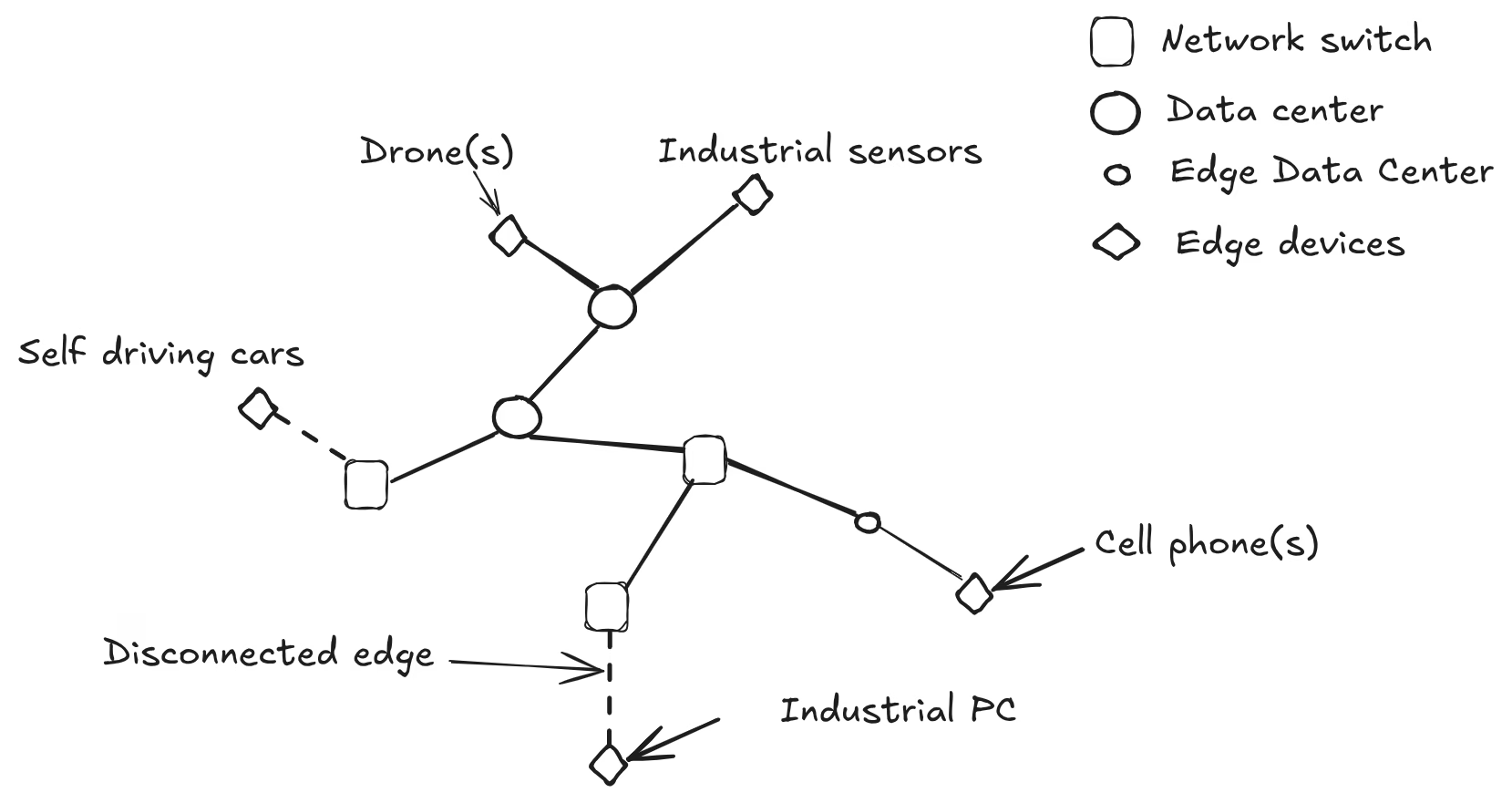

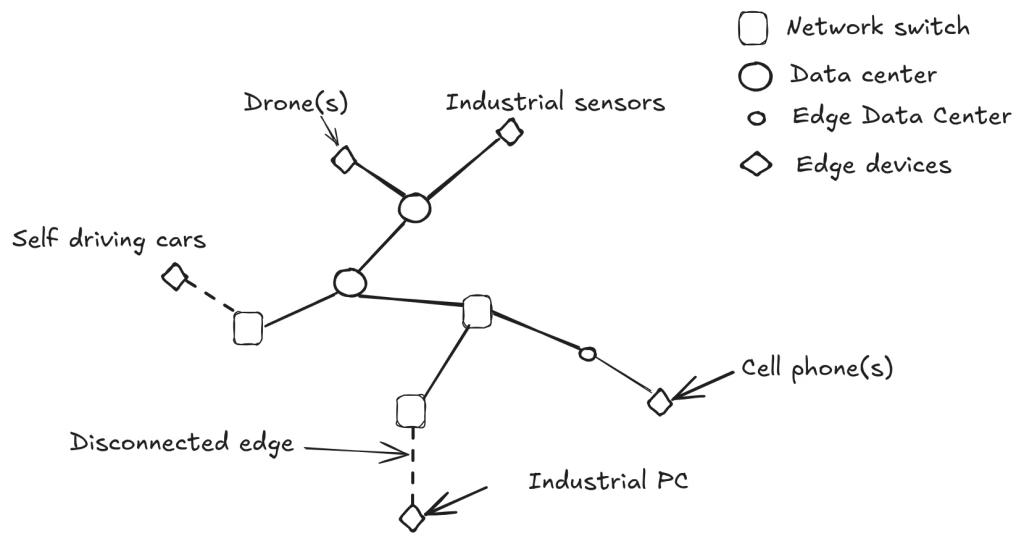

Because they require so much computing power, they typically run in data centers (a.k.a. the cloud).

For many uses, this works perfectly – like chatting with an AI or processing documents, where a little delay is no problem.

Latency: Why Speed Matters

Latency is the time between something happening and the AI giving you its answer.

- Example: If a camera sends an image to an AI, latency is the delay between capturing the photo and getting the AI’s interpretation.

- Why it matters:

  - In manufacturing, detecting a defect even a second too late can slow or stop a production line.

  - In self-driving cars, drones, or robots, even small delays can cause mistakes or accidents.

  - In security systems, slow detection can delay a response to an incident.

When AI runs in the cloud, sending data back and forth often adds several seconds of delay. Some customers we spoke with reported up to 5 seconds of extra latency, which is unacceptable for real-time decisions.
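To make the latency budget concrete, here is a minimal Python sketch. The helper names (`measure_latency`, `meets_deadline`), the 0.05 s inference time, and the 1 s deadline are assumptions chosen for illustration. The 5 s figure is the worst-case cloud overhead reported above.

```python
import time


def measure_latency(fn, *args):
    """Call fn(*args) once and return (result, elapsed_seconds)."""
    start = time.perf_counter()
    result = fn(*args)
    elapsed = time.perf_counter() - start
    return result, elapsed


def meets_deadline(inference_s, network_s, deadline_s):
    """Return True if network round trip plus inference fits within the deadline."""
    return network_s + inference_s <= deadline_s


# Illustrative numbers only: inference time and deadline are assumptions.
INFERENCE_S = 0.05
DEADLINE_S = 1.0

if __name__ == "__main__":
    # Cloud: same model, plus up to 5 s of extra network latency.
    print("cloud:", meets_deadline(INFERENCE_S, network_s=5.0, deadline_s=DEADLINE_S))
    # Edge: the model runs next to the camera, so network overhead is ~0.
    print("edge: ", meets_deadline(INFERENCE_S, network_s=0.0, deadline_s=DEADLINE_S))
```

In a real system you would wrap the actual inference call in `measure_latency` and take many samples, since a single measurement says little about worst-case behavior.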

The Privacy Problem

Latency isn’t the only issue.

Some organizations don’t want sensitive data leaving their premises. Sending data to the cloud raises privacy and compliance concerns – and recent headlines about AI privacy haven’t helped (see this example).

The Solution: AI at the Edge

Instead of running AI in far-away data centers, we can run it locally, on devices close to where the data is generated.

These are called edge devices – because they sit at the “edge” of a network, near the action.

Edge Devices vs. Edge Data Centers

- Edge devices: Physical devices (from powerful computers to small sensors) that can run AI on-site.

- Edge data centers: Smaller data centers located near customers, reducing latency by processing data closer to where it’s needed. These are often used for things like video streaming or high-frequency trading.

Types of Edge Devices

- High-end:

  - Comparable to a PC or small server.

  - Powerful GPUs with large memory (tens of GB).

  - Typically need fans for cooling.

- Mid-range:

  - Devices like NVIDIA Jetson modules (ARM-based CPU + GPU).

  - Variety of sizes and performance levels.

  - Usually need wall power.

- Low-end:

  - Embedded systems or microcontrollers.

  - Found in phones, drones, or industrial sensors.

  - May run on small batteries for days.

  - Have very limited memory (tens of MB) and run small AI models.
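To get a rough feel for which models fit on which tier, a back-of-the-envelope estimate multiplies the number of parameters by the bytes stored per parameter. The sketch below is illustrative only. The function names, the 32 GB and 32 MB device budgets, and the example model sizes are assumptions picked to match the "tens of GB" and "tens of MB" ranges above. It counts weights only and ignores activations and runtime overhead.

```python
GB = 10**9
MB = 10**6


def weights_size_bytes(num_params, bytes_per_param):
    """Approximate memory needed to hold the model weights alone."""
    return num_params * bytes_per_param


def fits(num_params, bytes_per_param, device_memory_bytes):
    """Return True if the weights alone fit in the device's memory."""
    return weights_size_bytes(num_params, bytes_per_param) <= device_memory_bytes


# Hypothetical device budgets (assumptions, not vendor specifications).
HIGH_END_MEMORY = 32 * GB
LOW_END_MEMORY = 32 * MB

if __name__ == "__main__":
    # A hypothetical 7-billion-parameter model stored at 2 bytes per parameter.
    print(weights_size_bytes(7_000_000_000, 2) / GB, "GB")
    print("high-end:", fits(7_000_000_000, 2, HIGH_END_MEMORY))
    print("low-end: ", fits(7_000_000_000, 2, LOW_END_MEMORY))
    # A hypothetical 1-million-parameter model stored at 1 byte per parameter.
    print("small model on low-end:", fits(1_000_000, 1, LOW_END_MEMORY))
```

The arithmetic is why low-end devices run small models: a multi-billion-parameter model exceeds a tens-of-MB budget by several orders of magnitude.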

Disconnected Edge

In some cases, devices can’t (or shouldn’t) connect to the internet – either for privacy or because the connection isn’t reliable.

These disconnected (or offline) edge devices still need occasional updates, often through a temporary connection to another device.

Want us to go deeper into edge AI devices, architectures, or real-world examples?

Drop a comment – we’ll cover it in a future post.

2 Responses

Great post! I also think Edge AI can be a big win for cost savings, especially in image-heavy applications. By handling data locally, you avoid a lot of the ongoing expenses tied to data transfer and cloud processing. It also helps you reduce your cloud footprint. This comes in addition to the performance, reliability and privacy benefits that you already mentioned.

Also, local models can be fine-tuned for application-specific needs, resulting in smaller models that provide better inference performance than much larger generic models.

What type of hardware do you think is most effective for edge deployments? I see many SoC vendors adding neural processing engines to their chips, but I think there is a lot of room for improvement. Have you come across a solution that is cheap and power-efficient?

Those are all great points.

In terms of hardware, there are a lot of small-form-factor, but still plug-in, Jetson-based devices being built. I believe there is a lot that can be done with them.

There is also a whole spectrum of even cheaper and more efficient hardware. SiMa.ai is doing some good work in this space. Players like Qualcomm and Apple are also active here, working to give cell phones enough power to run decent-sized models. There is OpenMV (https://openmv.io/collections/all-products) – I mention them because I ran across the founder. There are just too many to name.

Google has an active group working on the software side. You can find more about their efforts at https://io.google/2025/explore/technical-session-4.