Over the last few decades, computer vision has evolved rapidly — yet most models still operate with blinders on. They’re trained for narrow tasks, brittle in real-world conditions, and disconnected from language or context.

Vision-Language Models (VLMs) change that. These models can interpret what they see and understand natural language — allowing them to reason, explain, and adapt across domains.

In a previous post, we showed how VLMs can make inferences that were impossible with earlier vision systems. This post walks through the steps to deploy VLMs on the edge — where intelligence needs to run in real time, close to the physical world.

Step 0: Decide if a VLM Is Right for Your Use Case

Before deploying, ask yourself: Does your application require reasoning that combines vision and language?

VLMs are ideal when simple image recognition isn’t enough — when you need a model that can understand, explain, and act on what it sees. Unlike traditional vision models trained for narrow tasks (e.g., object detection or OCR), VLMs like NVILA can extract text, localize objects, and reason about scenes — all at once.

VLMs vs. LLMs: LLMs operate in text only. They’re great for summarization and dialog but lack visual grounding.

VLMs vs. classical vision models: Traditional models are fast and task-specific, but brittle and hard to adapt. VLMs are promptable and general-purpose, making them far more flexible.

If your application requires solving multiple visual tasks or making decisions based on nuanced, high-level reasoning, a VLM is probably the right fit. VLMs excel at interpreting images and video through the lens of complex textual instructions — whether it’s enforcing safety policies, identifying abstract defects or foreign objects, or detecting subtle compliance issues. They bring a layer of contextual understanding that traditional vision models simply can’t match, making them ideal for dynamic, real-world environments.

Step 1: Choose the Right Model and Hardware

Once you’ve decided a VLM is the right tool, the next step is selecting a model — and hardware that can run it efficiently on the edge.

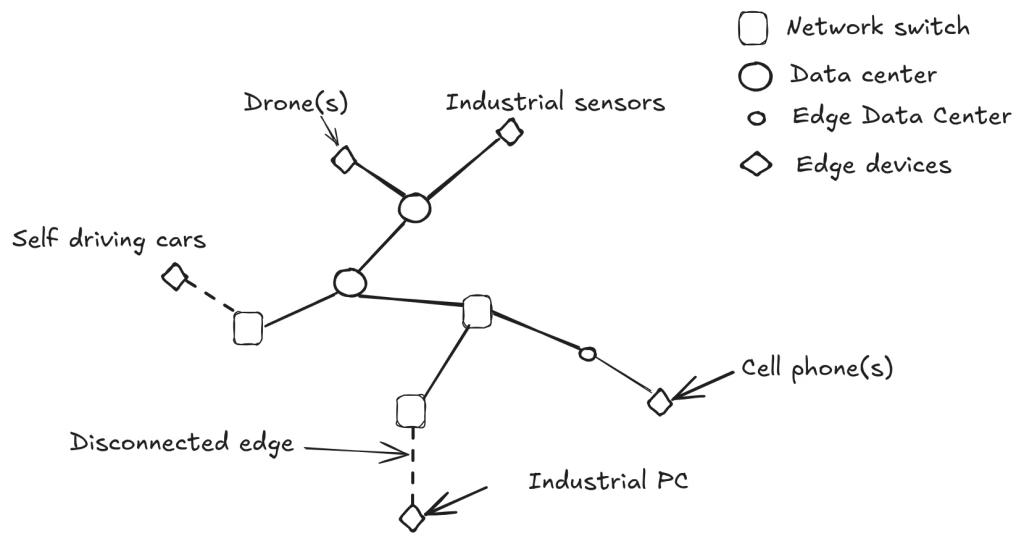

Think of edge-ready VLMs as compact foundational models: trained on large multi-modal datasets, but optimized for real-time, low-power inference. Compared to cloud-scale models, they offer lower power and memory requirements, faster inference, and built-in data privacy (no cloud upload needed).

Your hardware should match the model’s requirements and the deployment context. Key considerations include model size and VRAM, latency and throughput needs, power and thermal limits, and cost vs. performance trade-offs.

Models like NVILA 1.5B, 3B, 8B, and quantized NVILA 15B can run on industrial devices like a Siemens IPC with an NVIDIA L4 GPU (24GB), delivering robust performance at the edge. Other model families, like LLaVA, Gemma, and Qwen, offer models that can be deployed on the edge (see Hugging Face’s blog post on VLMs).
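
To make the "model size and VRAM" consideration concrete, here is a rough back-of-the-envelope sketch. It estimates memory for the weights only (parameters times bytes per parameter) and adds an assumed 20% overhead for everything else. That overhead factor, the function names, and the bit-widths in the demo are illustrative assumptions, not measured values for any specific model.

```python
# Rough weight-memory estimate: parameters x bytes per parameter.
# The 20% overhead factor is an assumed placeholder, not a measured value.
# Real usage also depends on the vision encoder, KV cache, and runtime.


def weight_memory_gb(params_billions: float, bits_per_weight: int) -> float:
    """Memory needed for the weights alone, in GB (1 GB = 1e9 bytes)."""
    bytes_total = params_billions * 1e9 * bits_per_weight / 8
    return bytes_total / 1e9


def fits(params_billions: float, bits_per_weight: int,
         vram_gb: float, overhead: float = 0.2) -> bool:
    """True if weights plus the assumed runtime overhead fit in VRAM."""
    needed = weight_memory_gb(params_billions, bits_per_weight) * (1 + overhead)
    return needed <= vram_gb


if __name__ == "__main__":
    VRAM_GB = 24  # e.g., an NVIDIA L4
    candidates = [
        ("1.5B @ 16-bit", 1.5, 16),
        ("8B @ 16-bit", 8, 16),
        ("15B @ 16-bit", 15, 16),
        ("15B @ 4-bit", 15, 4),
    ]
    for name, size_b, bits in candidates:
        gb = weight_memory_gb(size_b, bits)
        print(f"{name}: {gb:.1f} GB of weights, fits: {fits(size_b, bits, VRAM_GB)}")
```

Under these assumptions, a 15B model needs about 30 GB for 16-bit weights, which exceeds a 24GB card, but only about 7.5 GB at 4-bit. That is why the larger model is typically run quantized on this class of hardware.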

Whether you’re deploying on a drone, a robot arm, or a factory node, selecting the right model–hardware pair is critical to ensuring smooth, reliable VLM performance.

Step 2: Evaluate and Benchmark the Model

VLMs are powerful but not infallible. While they offer strong zero-shot performance out of the box, they’re still black-box systems that can hallucinate or misinterpret inputs in unpredictable ways. That’s why rigorous, application-specific evaluation is critical before deployment. A model that looks good in a demo may not be reliable in production. Every application has its own tolerance for false positives vs. false negatives and for ambiguous outputs, and its own trade-off between latency and accuracy.

While results from public benchmarks can give a good idea of a VLM’s suitability for a particular domain, they alone won’t cut it. You need to systematically evaluate VLMs using realistic, task-specific datasets, and let accuracy, not model size or hype, drive your decisions.

The most effective strategy is to build your application around an evaluation set, then iterate. Performance in your real-world context is what matters — not leaderboard scores.
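
As a starting point, an evaluation set can be as simple as a list of labeled images and a small harness that tallies errors and latency. The sketch below assumes a yes/no task (for example, "is there a defect?"). The `predict` callable is a hypothetical stand-in for whatever wraps your model and prompt.

```python
import time
from typing import Callable, Iterable, Tuple


def evaluate(predict: Callable[[str], bool],
             dataset: Iterable[Tuple[str, bool]]) -> dict:
    """Run `predict` over (image_path, label) pairs; tally errors and latency."""
    tp = fp = fn = tn = 0
    latencies = []
    for image_path, label in dataset:
        start = time.perf_counter()
        pred = predict(image_path)
        latencies.append(time.perf_counter() - start)
        if pred and label:
            tp += 1
        elif pred and not label:
            fp += 1  # false alarm
        elif not pred and label:
            fn += 1  # missed case
        else:
            tn += 1
    precision = tp / (tp + fp) if tp + fp else 0.0
    recall = tp / (tp + fn) if tp + fn else 0.0
    mean_latency = sum(latencies) / len(latencies) if latencies else 0.0
    return {"tp": tp, "fp": fp, "fn": fn, "tn": tn,
            "precision": precision, "recall": recall,
            "mean_latency_s": mean_latency}


if __name__ == "__main__":
    # Stub predictor and toy labels, for illustration only.
    toy_set = [("a.png", True), ("b.png", False), ("c.png", True), ("d.png", False)]
    stub = lambda path: path in {"a.png", "b.png"}
    print(evaluate(stub, toy_set))
```

Reporting false positives and false negatives separately, rather than a single accuracy number, makes it easier to check the result against the tolerances your application actually has.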

Step 3: Prompt Engineering for Zero- and Few-Shot Learning

Prompt optimization is the most straightforward way to improve model performance for a specific domain without changing the model itself. Just like LLMs, VLMs can be surprisingly effective in zero-shot and few-shot settings, where they’re given only a prompt (and optionally a few examples) to guide their inference. This is a powerful alternative to fine-tuning, especially in edge deployments where retraining may be impractical or impossible.

Prompts Are Part of the System

A prompt that works well for one model may completely fail with another. That’s why prompt + model should be treated as a jointly optimized system, not separate components.

Depending on your task, you’ll want to explore:

- Zero-shot prompts: No examples, just a well-phrased instruction.

- Few-shot prompts: Include a small number of task-specific examples to guide behavior.

The goal here is to maximize performance without modifying the model weights — keeping deployment simple while still tailoring the model to your use case. Popular tools for prompt optimization are OpenPrompt, DSPy, Promptbreeder, and TextGrad.
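
The difference between zero-shot and few-shot prompts is easy to see in code. The sketch below builds a chat-style message list; with no examples it is a zero-shot prompt, and with examples it becomes few-shot. The message schema (`role`, `content`, `image` keys) is a hypothetical format for illustration. Real VLM runtimes each define their own, so treat this as a shape to adapt rather than an API.

```python
from typing import List, Optional, Tuple


def build_messages(instruction: str, query_image: str,
                   examples: Optional[List[Tuple[str, str]]] = None) -> List[dict]:
    """Return a chat-style message list; with no examples this is zero-shot."""
    messages = [{"role": "system", "content": instruction}]
    # Each few-shot example is an (image_path, expected_answer) pair.
    for image_path, answer in examples or []:
        messages.append({"role": "user", "image": image_path})
        messages.append({"role": "assistant", "content": answer})
    # The image we actually want the model to reason about goes last.
    messages.append({"role": "user", "image": query_image})
    return messages


if __name__ == "__main__":
    instruction = "Answer 'yes' if the worker is wearing a hard hat, else 'no'."
    zero_shot = build_messages(instruction, "query.png")
    few_shot = build_messages(
        instruction, "query.png",
        examples=[("with_hat.png", "yes"), ("no_hat.png", "no")],
    )
    print(len(zero_shot), len(few_shot))
```

Keeping prompt construction in one function also makes it simple to version prompts and evaluate them alongside the model.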

Revisit Model Selection Based on Prompt Performance

You might find that one model responds better to domain-specific prompts than others, even if its raw accuracy was slightly lower in earlier benchmarks. That’s a signal: promptability matters. Re-run your evaluations with prompts in place to decide which model performs best in practice, given the constraints of your hardware and application.
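
One way to put this into practice is to score every (model, prompt) pair on your evaluation set and pick the best pair that still meets your latency budget. The sketch below assumes you have already collected an accuracy and a latency per pair. The model names, prompt names, and numbers in the demo are placeholders, not real measurements.

```python
from typing import Dict, Optional, Tuple

Pair = Tuple[str, str]  # (model_name, prompt_name)


def pick_best(results: Dict[Pair, Dict[str, float]],
              max_latency_s: float) -> Optional[Pair]:
    """Return the most accurate (model, prompt) pair within the latency budget."""
    eligible = {pair: metrics for pair, metrics in results.items()
                if metrics["latency_s"] <= max_latency_s}
    if not eligible:
        return None  # nothing meets the budget; revisit hardware or models
    return max(eligible, key=lambda pair: eligible[pair]["accuracy"])


if __name__ == "__main__":
    # Placeholder numbers for illustration only.
    results = {
        ("model_a", "zero_shot"): {"accuracy": 0.80, "latency_s": 0.2},
        ("model_a", "few_shot"): {"accuracy": 0.85, "latency_s": 0.3},
        ("model_b", "few_shot"): {"accuracy": 0.90, "latency_s": 0.9},
    }
    print(pick_best(results, max_latency_s=0.5))
```

In this toy example the most accurate pair is excluded because it misses the latency budget, which is exactly the kind of constraint that leaderboard rankings do not capture.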

Step 4: Fine-Tuning the Model (When Prompts Aren’t Enough)

If prompt engineering doesn’t get you the accuracy you need, it may be time to reach for the heavier tool: fine-tuning. Fine-tuning modifies the actual weights of the model, adapting it more deeply to your specific domain or task. This can unlock major performance gains — but it comes with significant trade-offs in complexity, compute, and risk.

Fine-tuning is typically done in the cloud even for small models that are deployed on the edge. It requires annotated data, and lots of it. This means building or curating high-quality datasets, which can be labor-intensive. You’ll need:

- Well-defined task formats

- Clearly labeled examples

- Alignment with your downstream objectives

It’s easy to overfit — or worse, cause catastrophic forgetting, where the model loses previously acquired general knowledge during fine-tuning. That’s why evaluation (see Step 2) remains critical even after tuning.
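
A simple guard against catastrophic forgetting is to evaluate on both your domain task and a few general tasks, before and after fine-tuning, and flag any task that got noticeably worse. The sketch below assumes you already have per-task accuracies. The task names, the numbers, and the 2-point tolerance are illustrative assumptions.

```python
def forgetting_report(before: dict, after: dict, tolerance: float = 0.02) -> dict:
    """Compare per-task accuracy before and after fine-tuning.

    A task is flagged as regressed if its accuracy dropped by more
    than `tolerance` (an assumed threshold; tune it for your use case).
    """
    report = {}
    for task, base_acc in before.items():
        delta = after[task] - base_acc
        report[task] = {"delta": delta, "regressed": delta < -tolerance}
    return report


if __name__ == "__main__":
    # Placeholder accuracies for illustration only.
    before = {"domain_task": 0.70, "general_vqa": 0.80}
    after = {"domain_task": 0.90, "general_vqa": 0.65}
    for task, info in forgetting_report(before, after).items():
        print(task, f"{info['delta']:+.2f}", "REGRESSED" if info["regressed"] else "ok")
```

If the domain task improves while a general task regresses, that is a hint to reduce the learning rate, mix general data back into training, or try a lighter-weight adaptation method.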

Step 5: Model Distillation (Fine-Tuning Small Models with Data from the Big Ones)

Sometimes the best way to make a small model smarter is to learn from a bigger one. That’s the core idea behind model distillation: use a large, high-capacity foundational VLM as a teacher, and train a smaller, edge-ready model to mimic its predictions. Distillation allows you to retain much of the reasoning power of the large model — while dramatically reducing inference costs and deployment complexity.

How It Works

In a typical distillation workflow:

- A large VLM (e.g., OpenAI’s GPT-4o or Anthropic’s Claude 3.5 Sonnet) generates pseudo-labels on domain-specific data, using either raw images or carefully curated prompts.

- A smaller model (e.g., LLaVA-3B or Qwen-3B) is fine-tuned on this synthetic dataset, learning to replicate the teacher’s behavior.

- The result is a compact, performant model that’s tuned for your task and can run on low-power edge devices.
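
The first step of this workflow, generating pseudo-labels, can be sketched in a few lines. Here `teacher` is a hypothetical callable that wraps whichever large model you use and returns its text response for an image and prompt. The record fields and the JSON Lines output are assumptions about what a downstream fine-tuning job might expect, not a fixed standard.

```python
import json
from typing import Callable, Iterable, List


def build_distillation_set(image_paths: Iterable[str], prompt: str,
                           teacher: Callable[[str, str], str]) -> List[dict]:
    """Ask the teacher for a pseudo-label per image and collect training records."""
    records = []
    for path in image_paths:
        label = teacher(path, prompt)
        if not label.strip():
            continue  # skip empty teacher responses rather than train on them
        records.append({"image": path, "prompt": prompt, "response": label})
    return records


def to_jsonl(records: List[dict]) -> str:
    """Serialize records as JSON Lines, one training example per line."""
    return "\n".join(json.dumps(record) for record in records)


if __name__ == "__main__":
    # Stub teacher for illustration; a real one would call the large VLM.
    def stub_teacher(path: str, prompt: str) -> str:
        return "" if path == "blurry.png" else "No foreign objects visible."

    data = build_distillation_set(
        ["belt_01.png", "blurry.png", "belt_02.png"],
        "Describe any foreign objects on the conveyor belt.",
        stub_teacher,
    )
    print(to_jsonl(data))
```

In practice you would also want to spot-check a sample of the teacher’s outputs by hand, since the student will inherit the teacher’s mistakes.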

This process works especially well when:

- You trust the teacher model’s inferences (even if you don’t have labeled data)

- You need to deploy at scale and can’t afford to run large models in production

- You want to compress domain knowledge from a foundational model into a smaller one

Why Distillation Matters for Edge VLMs

Distillation helps bridge the gap between the accuracy of large models and the constraints of edge hardware. It’s a practical way to inject advanced reasoning into devices where full-scale VLMs simply won’t fit — without sacrificing too much performance.

By using big models to teach small ones, you’re effectively scaling intelligence downward — making high-level AI available everywhere, from drones to embedded cameras to industrial sensors.

Step 6: Quantization & Pruning

Even lightweight, edge-friendly VLMs can be too large or power-hungry for real-world deployment, especially on devices with strict memory, latency, or energy constraints. To close that gap, we turn to model optimization techniques like quantization and pruning. These methods reduce size and inference cost while preserving most of the model’s accuracy, making edge deployment viable without major trade-offs.

Quantization works by lowering the numerical precision of model weights and activations, typically from 32-bit or 16-bit floating point to 8-bit or 4-bit integers. This dramatically cuts memory usage, reduces power consumption, and speeds up inference. One standout technique is AWQ (Activation-aware Weight Quantization), which uses activation statistics to identify the small fraction of weights that matter most and protects them during quantization, preserving model accuracy even at lower bit-widths. Simpler strategies like post-training quantization offer quick wins without retraining, while quantization-aware training yields better results if you have the data and compute to support it.
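
To show the basic mechanics, here is a minimal sketch of symmetric, per-tensor post-training quantization to 8-bit integers in plain Python. This is the simplest variant, not AWQ, and real toolchains work on tensors with per-channel or per-group scales. The function names and toy weights are illustrative.

```python
def quantize_int8(weights):
    """Symmetric per-tensor quantization: map floats to integers in [-127, 127]."""
    max_abs = max(abs(w) for w in weights)
    scale = max_abs / 127 if max_abs > 0 else 1.0
    # Round to the nearest integer step, then clamp to the int8 range.
    q = [max(-127, min(127, round(w / scale))) for w in weights]
    return q, scale


def dequantize(q, scale):
    """Recover approximate float weights from the integers and the scale."""
    return [v * scale for v in q]


if __name__ == "__main__":
    weights = [0.5, -1.27, 0.01, 1.0]  # toy values for illustration
    q, scale = quantize_int8(weights)
    restored = dequantize(q, scale)
    worst = max(abs(a - b) for a, b in zip(weights, restored))
    print("quantized:", q)
    print("max round-trip error:", worst)
```

Each weight now needs 8 bits instead of 32, a 4x reduction in storage, at the cost of a rounding error of at most half a quantization step per weight.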

Pruning, on the other hand, reduces model size by removing redundant weights or neurons. It can be applied at various levels — from individual weights to full channels or layers. Techniques like magnitude-based pruning or sparsity-inducing strategies help shrink the model further while maintaining functional performance. In many cases, a small amount of fine-tuning is enough to recover any accuracy lost in the process.
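
Magnitude-based pruning is simple enough to sketch directly: rank the weights by absolute value and zero out the smallest ones. The version below is unstructured pruning on a flat list, written in plain Python for illustration. The function name and the toy weights are assumptions, and real frameworks apply the same idea to tensors with masks.

```python
def magnitude_prune(weights, sparsity):
    """Zero out the fraction `sparsity` of weights with the smallest magnitude."""
    if not 0.0 <= sparsity <= 1.0:
        raise ValueError("sparsity must be between 0 and 1")
    k = int(len(weights) * sparsity)  # number of weights to remove
    if k == 0:
        return list(weights)
    # Indices of the k smallest-magnitude weights.
    by_magnitude = sorted(range(len(weights)), key=lambda i: abs(weights[i]))
    drop = set(by_magnitude[:k])
    return [0.0 if i in drop else w for i, w in enumerate(weights)]


if __name__ == "__main__":
    weights = [0.9, -0.05, 0.4, 0.01]  # toy values for illustration
    print(magnitude_prune(weights, sparsity=0.5))
```

Note that zeroed weights only save memory and compute if the runtime or hardware can exploit sparsity, which is one reason structured pruning of whole channels or layers is often preferred on edge devices.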

Challenges & Opportunities Ahead

Deploying Vision-Language Models at the edge opens up enormous potential — but it also reveals critical gaps in today’s tooling and workflows.

1. Prompt Optimization Needs to Catch Up to Vision: Most prompt optimization techniques are designed for LLMs — assuming purely text-based inputs. They don’t account for how prompts interact with images in few-shot examples, and how that interplay varies across VLM architectures. We need new tools that jointly optimize prompt + image input for multi-modal reasoning.

2. Model Selection and Prompting Must Be Jointly Optimized: Right now, model selection and prompt engineering are treated as separate steps. But in practice, they’re tightly coupled. A prompt that works well on one model may fail on another. Future systems must treat model + prompt as a unified, co-optimized component.

3. Quantization and Model Size Trade-offs Are Complex: What’s better for your use case:

- A 3B-parameter model running out of the box?

- A quantized 15B model with prompt tuning?

- Or a 1.5B fine-tuned model trained on domain-specific data?

There’s no universal answer — only careful experimentation and benchmarking can reveal what works best. But this process is time-consuming and highly manual. We need automated tools to navigate these trade-offs intelligently.

4. Fine-Tuning Is Still Too Hard: Fine-tuning remains a high-friction process:

- It demands annotated data

- It requires expertise in training pipelines

- It risks catastrophic forgetting

- It depends on substantial compute resources

Even parameter-efficient methods like LoRA aren’t plug-and-play. We need simpler, model-agnostic interfaces for edge fine-tuning — without requiring every team to be a research lab.

5. Distillation Remains Underutilized: Distillation offers a compelling path to bring the reasoning power of large VLMs into smaller, deployable models. But it’s still underdeveloped in vision-language workflows. Tools that make data generation, teacher-student training, and evaluation easier and more integrated could dramatically accelerate edge deployment.

The Case for an Integrated Edge VLM Stack

What’s missing is an integrated, end-to-end system — one that:

- Selects and configures the optimal model for a given task

- Tunes prompts and architecture as a unified system

- Supports optional fine-tuning and distillation

- Applies quantization and pruning intelligently

- And outputs a domain-specific, edge-ready VLM with minimal friction

Visum AI is building exactly that.

Our goal is to lower the barrier to deploying intelligent, vision-capable AI in the real world — enabling VLMs to power everything from robots and autonomous systems to industrial safety and quality control. If we get this right, VLMs won’t just be the next big thing in AI — they’ll be the next essential layer of perception for machines in the physical world.